Adding NVIDIA XLIO support to libevpl: 200GbE with one TCP socket

A few weeks ago a PR popped up in the libxlio project that adds listen socket support, which makes it possible to use the NVIDIA XLIO socket API end-to-end for TCP connections.

I was curious how efficient that would really be, so I added XLIO support to my libevpl project, which aims to provide an easy-to-use, unified API on top of the various bespoke hardware offload technologies.

Here are some benchmark numbers from two EPYC servers connected by 200GbE CX7 cards:

| | Throughput per flow | RTT |
|---|---|---|
| Ordinary TCP | 49.66 Gbps | 33.6 µs |
| XLIO TCP | 197.23 Gbps | 5.53 µs |

So that's roughly a 4x throughput improvement and a 6x latency improvement, which means we can drive a 200GbE card at close to line rate with just one connection and one thread. It also starts to make TCP competitive with RoCE.
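For anyone who wants to check the headline ratios, they follow directly from the single-flow numbers above. The link-utilization lines assume the nominal 200 Gbps line rate as the denominator; that ignores Ethernet, IP and TCP framing overhead, so the real goodput ceiling is somewhat below 200 Gbps and actual utilization is a bit higher than shown.

```latex
\begin{aligned}
\text{throughput gain} &= \frac{197.23\ \text{Gbps}}{49.66\ \text{Gbps}} \approx 3.97 \\
\text{RTT improvement} &= \frac{33.6\ \mu\text{s}}{5.53\ \mu\text{s}} \approx 6.08 \\
\text{link utilization, XLIO TCP} &= \frac{197.23\ \text{Gbps}}{200\ \text{Gbps}} \approx 98.6\% \\
\text{link utilization, ordinary TCP} &= \frac{49.66\ \text{Gbps}}{200\ \text{Gbps}} \approx 24.8\%
\end{aligned}
```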

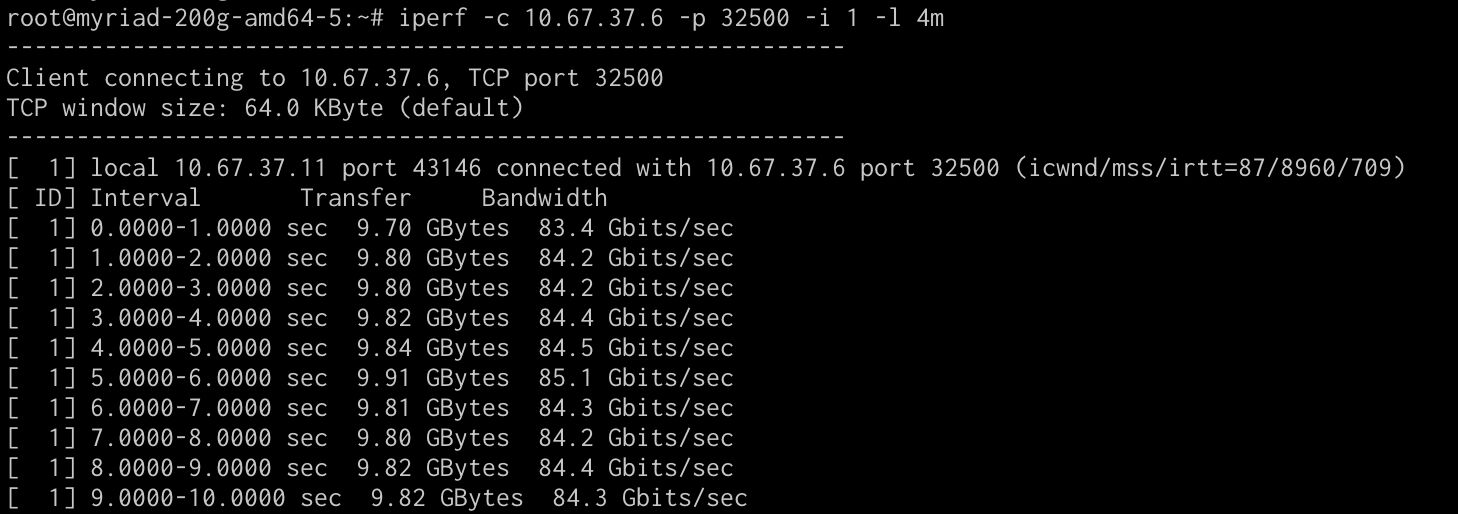

Since XLIO TCP is still TCP, we can also use it on only one end of the connection and still see a substantial benefit: plain old iperf sending data to a libevpl endpoint can do 84 Gbps per flow. Could be useful for, I don't know, a NAS appliance with offload talking to NAS clients without… 😉 It will take some bake time; the NVIDIA support for this isn't even merged into mainline yet…

In other news, io_uring zero-copy RX apparently became possible on Broadcom cards as of early October, and it is showing similar results. Alas, I have no such cards to test with.

In any case, I'm looking forward to a world where these high-speed cards are easier to put to effective use.